When it comes to residential property valuation, Automated Valuation Models (AVMs) have a lurking problem: AVM testing is broken and has been for some time. As a result, we don’t really know how much we can or should rely on AVMs for accurate valuations.

Testing AVMs seems straightforward: take the AVM’s estimate and compare it to the price of an arm’s length market transaction. The approach is theoretically sound and widely agreed upon, but unfortunately it is no longer possible.

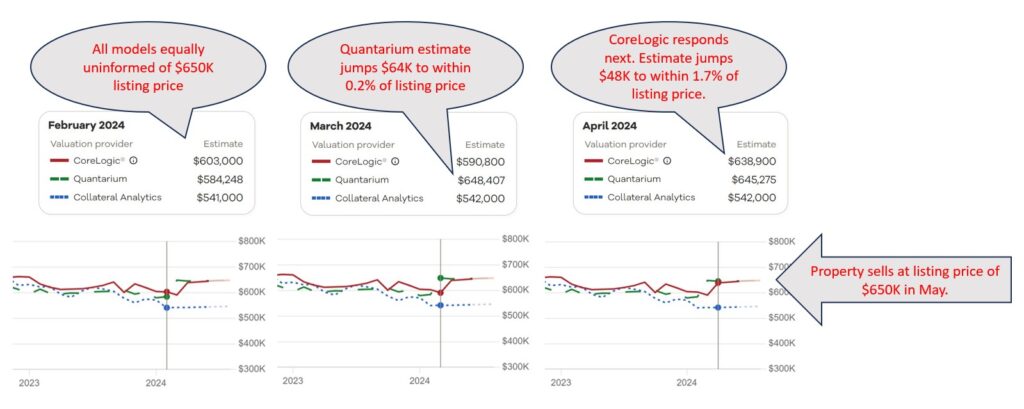

Once you see the problem, you cannot unsee it. Most, if not all, AVMs have access to multiple listing service (MLS) data, including property listing prices. Studies have shown that many AVMs anchor their predictions to these listing prices. While this makes them more accurate when they have listing data, it casts serious doubt on their ability to accurately assess property values in the absence of that information.

All this opens up the question: what do we want to use AVMs for? If all we want is to get a good estimate of what price a sale will close at, once we know the listing price, then they are great. However, if the idea is to get an objective estimate of the property’s likely market value to refinance a mortgage or to calculate equity or to measure default risk, then they are… well, it’s hard to say. Current testing methodology can’t determine how accurate they are.

But there is promise on the horizon. After five years of meticulous development and collaboration with AVM vendors and their models, AVMetrics is proud to unveil our game-changing Predictive Testing Methodology (PTM™), designed specifically to circumvent the problem that is invalidating all current testing. AVMetrics’ new approach will replace the current methods cluttering the landscape and finally provide a realistic view of AVMs’ predictive capabilities.¹

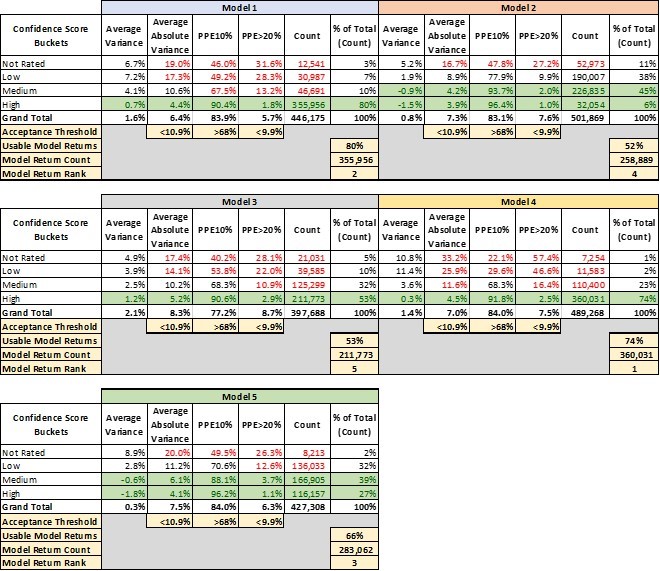

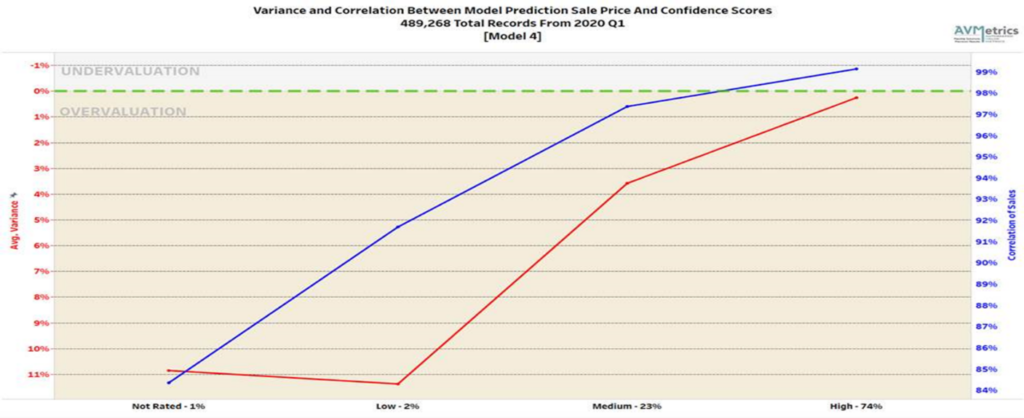

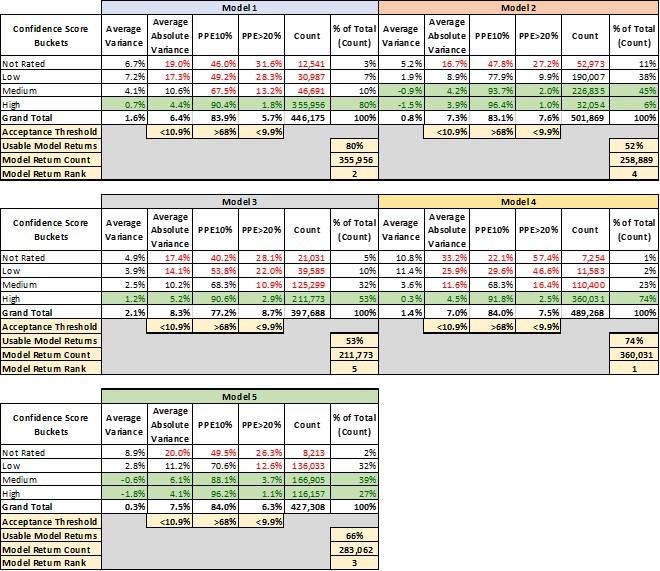

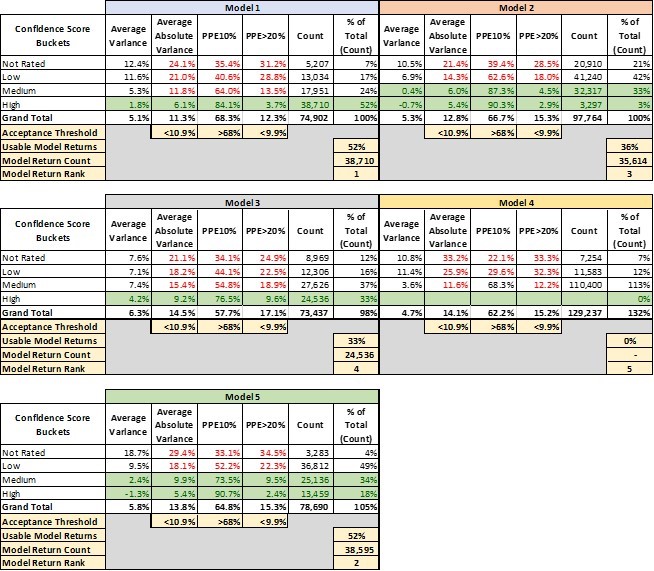

At the heart of PTM™ lies our extensive Model Repository Database (MRD™), which stores predictions from every participating AVM for every residential property in the United States: an astonishing 100 to 120 million properties per AVM. With monthly refreshes, the database holds more than a billion records per model, offering unparalleled insight into AVM performance over time.

But tracking historical estimates at massive scale wasn’t enough. To address the influence of listing prices on AVM predictions, we’ve integrated a national MLS database into our methodology. By pinpointing the moment when AVMs gained visibility into listing prices, we can assess predictions for sold properties just before this information influenced the models, which is the key to isolating confirmation bias. While the concept may seem straightforward, the execution is anything but. PTM™ navigates a complex web of factors to ensure a level playing field for all models involved, setting a new standard for AVM testing.
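To make the core idea concrete, here is a minimal Python sketch of the selection step described above: for a sold property, take the last estimate a model produced before the listing date, and score only that estimate against the sale price. This is an illustration under stated assumptions, not AVMetrics’ actual implementation. The `Estimate` record, the function names, the strict "before the listing date" cutoff, and the sample figures are all hypothetical, and a real methodology would have to handle many more factors (relistings, data latency, differing refresh schedules across models).

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional, Sequence


@dataclass(frozen=True)
class Estimate:
    """One monthly AVM prediction for a single property (hypothetical record shape)."""
    as_of: date    # date the model produced this estimate
    value: float   # estimated market value in dollars


def last_estimate_before_listing(
    estimates: Sequence[Estimate], listing_date: date
) -> Optional[Estimate]:
    """Return the most recent estimate dated strictly before the listing date."""
    prior = [e for e in estimates if e.as_of < listing_date]
    return max(prior, key=lambda e: e.as_of) if prior else None


def percentage_error(estimate_value: float, sale_price: float) -> float:
    """Signed error relative to the sale price; negative means the AVM came in low."""
    return (estimate_value - sale_price) / sale_price


# Made-up example data: three monthly estimates, a listing on 2024-02-15,
# and an arm's length sale that later closed at $440,000.
history = [
    Estimate(date(2024, 1, 1), 400_000.0),
    Estimate(date(2024, 2, 1), 405_000.0),
    Estimate(date(2024, 3, 1), 450_000.0),  # produced after the listing went live
]
listing_date = date(2024, 2, 15)
sale_price = 440_000.0

pre_listing = last_estimate_before_listing(history, listing_date)
if pre_listing is not None:
    print(f"pre-listing error: {percentage_error(pre_listing.value, sale_price):+.1%}")
print(f"post-listing error: {percentage_error(history[-1].value, sale_price):+.1%}")
```

In this invented example, the estimate produced after the listing lands closer to the sale price than the one produced before it. Scoring only the pre-listing estimate is what keeps the listing price from flattering the model's measured accuracy.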

So, how do we restore confidence in AVMs? With PTM™, we’re enabling accurate AVM testing, which in turn paves the way for more accurate property valuations. Those, in turn, empower stakeholders to make informed decisions with confidence. Join us in revolutionizing AVM testing and moving into the future of improved property valuation accuracy. Together, we can unlock new possibilities and drive meaningful change in the industry.

¹ The majority of commercially available AVMs support this testing methodology, and more than two years of testing have been conducted on more than 25 models.