The AVMNews sat down with our publisher Lee Kennedy to discuss trends in the industry.

AVMNews: Lee, as the Managing Director at AVMetrics, you’re sitting at the center of the Automated Valuation Model (AVM) industry. What changes have you seen recently?

Lee: There’s a lot going on. We see firsthand how the evolution of the technology has affected the sector dramatically. The availability of data and the decline in costs of storage and computing power have opened the doors to new competition. We see new entrants, built by fresh faces and using new techniques. We still have a number of large players offering well-established AVMs, but we also see the larger players retiring some of their older models. The established AVM players have responded in some cases by raising their game, and in other cases by buying their upstart rivals. So, we’ve seen increased competition and increased consolidation at the same time.

And, it’s true that the tools keep getting better. It’s not evenly distributed, but on average they continue to do a better and better job.

AVMNews: In what ways do AVMs continue to get better?

Lee: AVMetrics has been conducting contemporaneous AVM testing for over a decade now, and we have many quantitative metrics showing how much better AVMs are getting. Specifically, we run statistical analysis around the comparison of AVM estimates to sales prices that are unknown to the models. We have seen increases in model accuracy rates measured by percentage of predicted error (PPE), mean absolute error (MAE) and a host of other metrics. Models are getting better at predicting sale prices and when they miss, they don’t miss by as much as they used to.
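To make the metrics Lee mentions concrete, here is a minimal sketch of how PPE and MAE might be computed when AVM estimates are compared with sale prices the models had not seen. It assumes PPE is read as the share of estimates falling within a tolerance band (here ±10%) of the sale price, and that MAE is expressed as a fraction of the sale price. Both definitions, the function names and the sample figures are illustrative assumptions, not AVMetrics’ actual methodology or data.

```python
from statistics import mean


def percentage_errors(estimates, sale_prices):
    """Signed error of each estimate, as a fraction of the sale price."""
    return [(est - price) / price for est, price in zip(estimates, sale_prices)]


def ppe(estimates, sale_prices, tolerance=0.10):
    """Share of estimates within +/- tolerance of the sale price (assumed PPE definition)."""
    errors = percentage_errors(estimates, sale_prices)
    within = sum(1 for e in errors if abs(e) <= tolerance)
    return within / len(errors)


def mean_absolute_error(estimates, sale_prices):
    """Average size of the miss, as a fraction of the sale price."""
    return mean(abs(e) for e in percentage_errors(estimates, sale_prices))


# Hypothetical figures for illustration only.
estimates = [310_000, 209_000, 455_000, 520_000]
sale_prices = [300_000, 220_000, 450_000, 400_000]

print(f"PPE10: {ppe(estimates, sale_prices):.0%}")
print(f"MAE:   {mean_absolute_error(estimates, sale_prices):.1%}")
```

Under these assumptions, a rising PPE means a model lands close to the sale price more often, while a falling MAE means that when it misses, it misses by less.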

AVMNews: What about on the regulatory side?

Lee: There is always a lot going on. The regulatory environment has eased in the last two years reflecting a whole new attitude in Washington, D.C. – one that is more open to input and more interested in streamlining. Take, for instance, the 2018 Treasury report that focuses on advancing technologies (See “A Financial System That Creates Economic Opportunities”).

Last November, I was at a key stakeholder forum for the Appraisal Subcommittee (ASC). One area of focus was harmonizing appraisal requirements across agencies. Another major focus was how to effectively employ new tools in support of the appraisal industry, including the growth of Alternative Valuation Products that utilize AVMs.

AVMNews: I know that you also wrote a letter to the Federal Financial Institutions Examination Council (FFIEC) about raising the de minimis threshold, below which some lending guidelines would NOT require an appraisal. This year in July they elected to change the de minimis threshold from $250,000 to $400,000 for residential housing. What are your thoughts?

Lee: Well, I think that the question everyone is struggling with is “What does the future hold for appraisers and AVMs?” Obviously, the field of appraisers is shrinking, and AVMs are economical, faster and improving. How is this going to play out?

First, my strong feeling is that appraisers are a valuable and limited resource, and we need to employ them at their highest and best use. Trying to be a “manual AVM” is not their highest and best use. Their expertise should be focused on the qualitative aspects of the valuation process, such as condition, market and locational influences, not on quantitative facts such as bed and bath counts. Models do not capture and analyze the qualitative aspects of a property very well.

Several companies are developing ways of merging the robust data processing capabilities of an AVM with the qualitative assessment skills of appraisers. Today, these products typically use an AVM at their core and then satisfy additional FFIEC evaluation criteria (physical property condition, market and location influences) with an additional service. For example, the lender can wrap a Property Condition Report (PCR) around the AVM and reconcile that data in support of a Home Equity Line of Credit (HELOC) lending decision. This type of hybrid product offering is on the track that we’re headed down. Many AMCs and software developers have already created these types of products for proprietary use or for use on multiple platforms.

AVMNews: AVMs were supposed to take over the world. Can you tell us what happened?

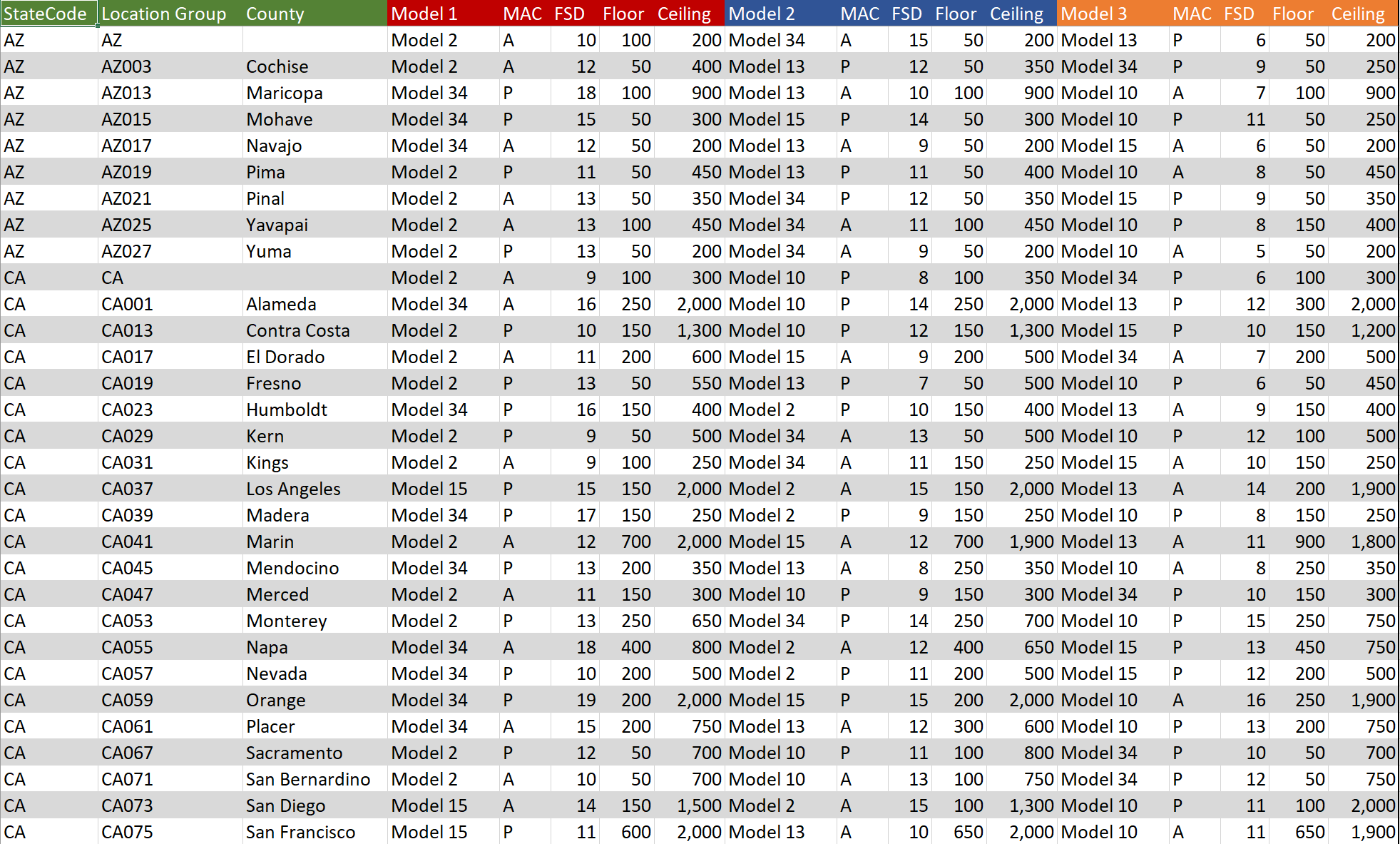

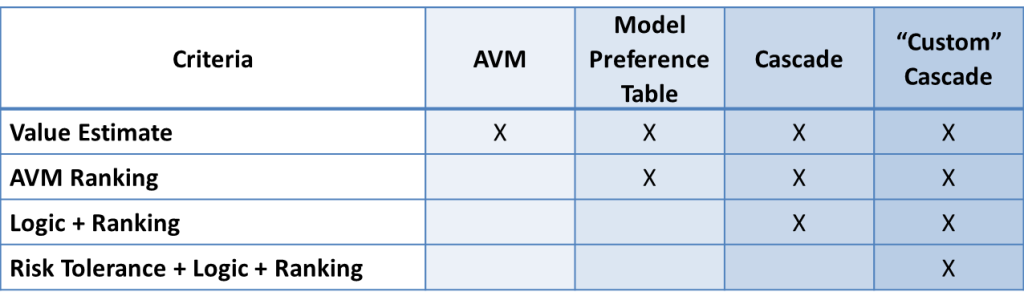

Lee: Well, the Financial Crisis is one thing that happened. Lawsuits ensued, and everyone got a lot more conservative. And, the success of AVMs developed into hype that was obviously unrealistic. But, AVMs are starting to gain traction again. We are answering a lot more calls from lenders who want help implementing AVMs in their origination processes. They typically need our help with policies and procedures to stay on the right side of the Office of the Comptroller of the Currency (OCC) regulations, and so in the last year, we’ve done training at several banks.

Everyone is quick to point out that AVMs are not infallible, but AVMs are pretty incredible tools when you consider their speed, accuracy, cost and scalability. And, they are getting more impressive. Behind the curtain, the models are using neural networks and machine learning algorithms. Some use creative techniques to adjust prices conditionally in response to situational or temporary conditions. We test them and talk to their developers, and we can see how that creativity translates into improved performance.

AVMNews: You consult to litigants about the use of AVMs in lawsuits. How do you think legal decisions and risk will affect the use of AVMs?

Lee: Litigation support is an area of our business where I am restricted from saying very much. It has been, and continues to be, an enlightening experience: some of the best minds are involved in all aspects of collateral valuation, and the “experts” are truly that, experts in their fields as econometricians, statisticians, appraisers, modelers and so on. With over 50 cases behind us now, it is also very interesting to get a look behind the curtain of the legal system and see how all of that works. I do want to emphasize that my comments in this interview are in the context of contemporaneous AVMs that were tested during the time period shown here, not retrospective AVMs looking back to those time periods.

AVMNews: AVMetrics now publishes the AVMNews – how did that come about?

Lee: As you and the many subscribers know, Perry Minus of Wells Fargo started that publication as a labor of love over a decade ago. When he retired recently, he asked if I would take over as the publisher. We were honored to be trusted with his creation, and we see it as a way to be good citizens and contribute to the industry as a whole.

AVMNews: I encourage anyone interested in receiving the quarterly newsletter for free to go to http://eepurl.com/cni8Db

The AVMNews is a quarterly newsletter that is a compilation of interesting and noteworthy articles, news items and press releases that are relevant to the AVM industry. Published by AVMetrics, the AVMNews endeavors to educate the industry and share knowledge about Automated Valuation Models for the betterment of everyone involved.